Gemma2 9B Prompt Template

Gemma 2 is Google's latest iteration of open LLMs. At only 9B parameters, Gemma 2 9B is a great size for those with limited VRAM or RAM, while still performing very well. It was trained on 8 trillion tokens, and this expanded dataset consists primarily of web data (mostly English), code, and mathematics.

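The VRAM claim can be made concrete with a rough, weights-only estimate. The Python sketch below assumes a round 9 × 10^9 parameters and the usual bytes per parameter for each precision. It ignores the KV cache, activations, and runtime overhead, so real usage will be higher. The function and table names are illustrative, not from any library.

```python
# Rough, weights-only memory estimate for a ~9B-parameter model.
# Assumption: 9e9 parameters (a round figure; the exact count differs slightly).
# Ignores the KV cache, activations, and framework overhead, so real usage is higher.

PARAMS = 9e9

# Typical storage cost per parameter at each precision (illustrative table).
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "bf16/fp16": 2.0,
    "int8": 1.0,
    "4-bit": 0.5,
}


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Return the weight footprint in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


def fits(params: float, bytes_per_param: float, budget_gb: float) -> bool:
    """True if the weights alone fit in budget_gb (no headroom for anything else)."""
    return weight_memory_gb(params, bytes_per_param) <= budget_gb


if __name__ == "__main__":
    budget = 16.0  # example budget: a 16 GB GPU such as the NVIDIA T4
    for name, bpp in BYTES_PER_PARAM.items():
        gb = weight_memory_gb(PARAMS, bpp)
        print(f"{name:>10}: {gb:5.1f} GB, fits in {budget:.0f} GB: {fits(PARAMS, bpp, budget)}")
```

Under these assumptions the weights alone take about 18 GB at 16-bit precision, already more than a 16 GB card holds. At 8-bit (about 9 GB) or 4-bit (about 4.5 GB) they leave room for the KV cache, which is one reason quantized builds of the 9B model are popular.
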
If you use an application with prompt presets, choose the 'Google Gemma Instruct' preset. You can also use a prompt template that specifies the format in which Gemma responds to your prompt, and you can follow this format to build the prompt manually if you need to.

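Gemma's instruction-tuned models mark each turn with `<start_of_turn>` and `<end_of_turn>` and label the two speakers `user` and `model`. The Python sketch below assumes that format and builds a prompt by hand. `build_gemma_prompt` and the message-dict shape are hypothetical names for illustration, not a library API. Because Gemma has no separate system role, the sketch rejects one. Put system-style instructions into the first user turn instead.

```python
# Minimal sketch: build a Gemma-style chat prompt by hand.
# Assumptions: messages are {"role", "content"} dicts with roles "user" or
# "assistant"; "assistant" is written as "model" in the prompt.
from typing import Dict, List

BOS = "<bos>"


def build_gemma_prompt(messages: List[Dict[str, str]],
                       add_generation_prompt: bool = True) -> str:
    """Turn a list of chat messages into a single Gemma-format prompt string."""
    parts = [BOS]
    for i, msg in enumerate(messages):
        role = msg["role"]
        if role == "assistant":
            role = "model"  # Gemma labels its own turns "model"
        if role not in ("user", "model"):
            raise ValueError(f"unsupported role: {msg['role']!r}")
        # Turns must alternate user/model, starting with user.
        expected = "user" if i % 2 == 0 else "model"
        if role != expected:
            raise ValueError("roles must alternate user/model, starting with user")
        parts.append(f"<start_of_turn>{role}\n{msg['content'].strip()}<end_of_turn>\n")
    if add_generation_prompt:
        # Open a model turn so generation continues from here.
        parts.append("<start_of_turn>model\n")
    return "".join(parts)


if __name__ == "__main__":
    print(build_gemma_prompt([{"role": "user", "content": "Write a haiku about GPUs."}]))
```

With Hugging Face Transformers, `tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)` should render an equivalent string from the template bundled with the model. This is usually safer than hand-building. If you pass a hand-built string to a tokenizer that inserts `<bos>` by itself, drop the leading `<bos>` to avoid a duplicate.
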
To get a GPU for running the model, you can create a runtime template with the N1 machine series and an attached NVIDIA Tesla T4 accelerator. The documentation has detailed steps.
Gemma2 9B IT CoT Prompts Test YouTube

Llama 3.1 8b VS Gemma 2 9b (Coding, Logic & Reasoning, Math)

Google releases the Gemma2 model family (9B & 27B) - Reading & Information Sharing - PyTorch Korea User Group

Gemma2 9B for free is Mission Possible! by Fabio Matricardi Medium

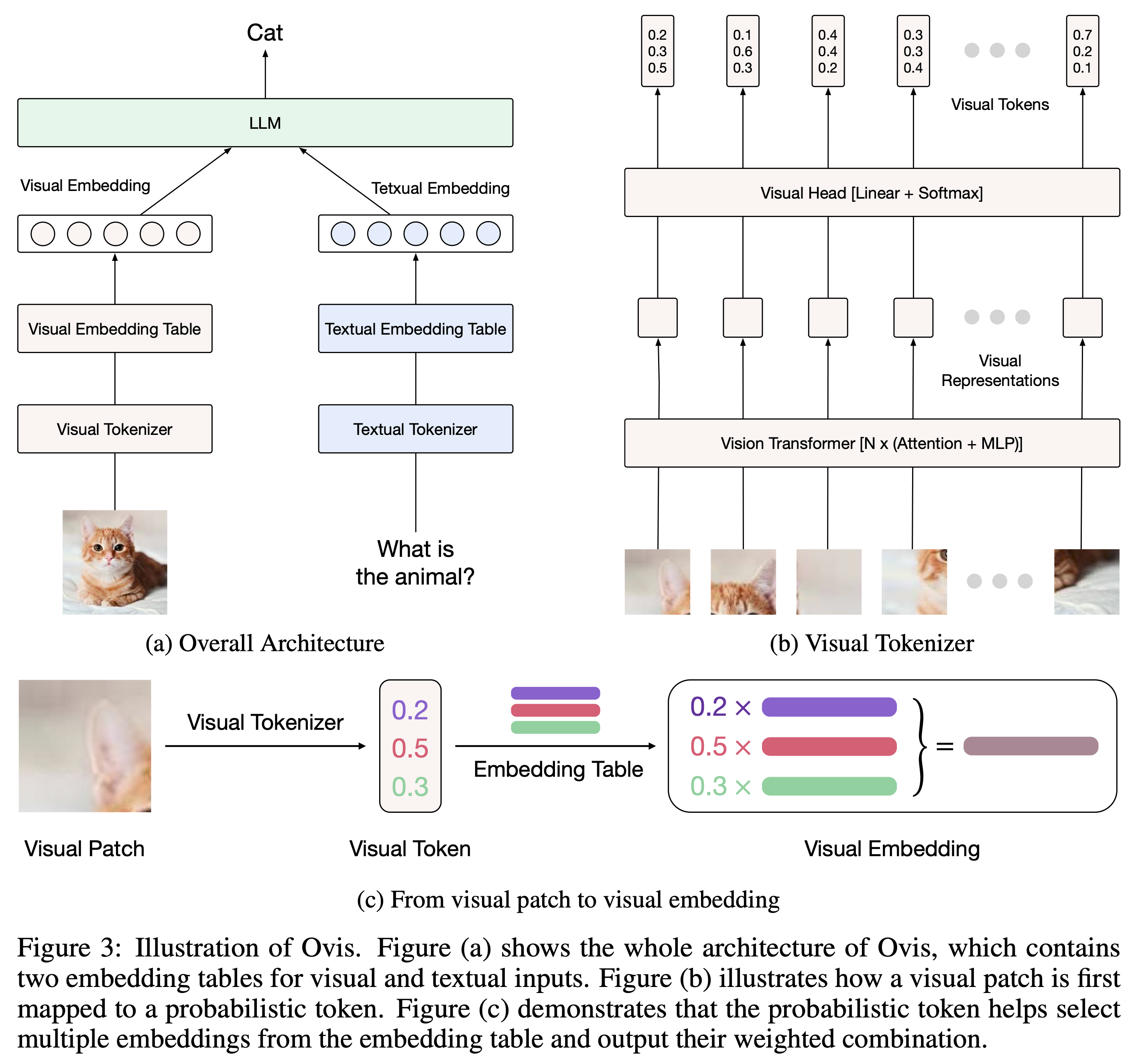

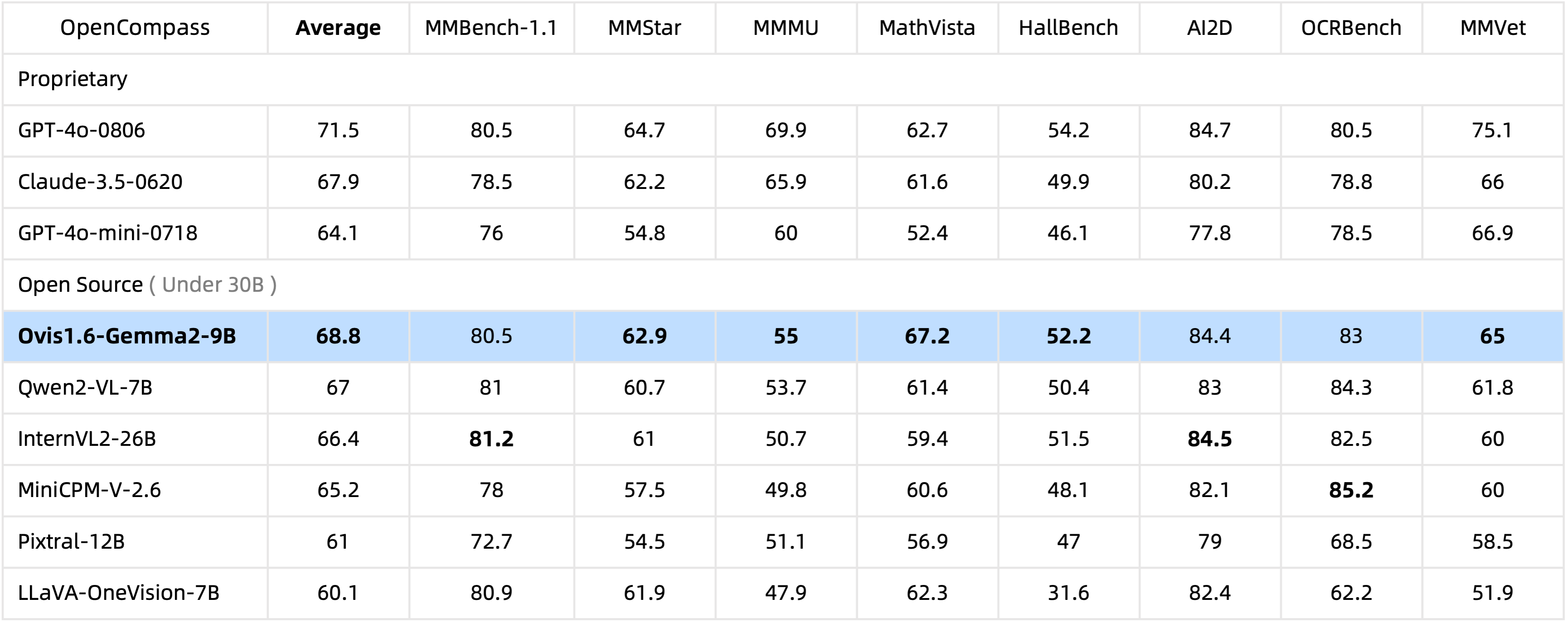

AIDCAI/Ovis1.6Gemma29B · Hugging Face

gmonsoon/gemma29bcptsahabataiv1instructGGUF · Hugging Face

Getting Started with Gemma29B

AIDCAI/Ovis1.6Gemma29BGPTQInt4 · Hugging Face

Trying out Ovis1.6Gemma29B