Llama 3 Prompt Template

Llama 3.1 prompts are the inputs you provide to the Llama 3.1 model to elicit specific responses. These prompts can be questions, statements, or commands that instruct the model on what ... They are useful for making personalized bots or integrating Llama 3 into ... Llama 3 template: special tokens. Following this prompt, Llama 3 completes it by generating the {{assistant_message}}. It signals the end of the {{assistant_message}} by generating the <|eot_id|> token. Explicitly apply the Llama 3.1 prompt template using the model tokenizer; this example is based on the model card from the Meta documentation and some tutorials which ... This is the current template that works for the other LLMs I am using. (System, given an input question, convert it ...) However, I want to get this system working with Llama 3. Learn best practices for prompting and selecting among Meta Llama 2 & 3 models.
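The completion behaviour described above can be sketched in plain Python. This is a minimal illustration, not the tokenizer's own implementation: the special tokens follow Meta's published Llama 3 instruct format, while the function names `build_llama3_prompt` and `extract_assistant_message` are hypothetical helpers introduced here. In practice the model tokenizer's chat template would normally produce this string for you.

```python
from typing import Dict, List

# Special tokens from Meta's published Llama 3 instruct format.
BEGIN_OF_TEXT = "<|begin_of_text|>"
START_HEADER = "<|start_header_id|>"
END_HEADER = "<|end_header_id|>"
EOT = "<|eot_id|>"


def build_llama3_prompt(messages: List[Dict[str, str]]) -> str:
    """Render chat messages as one prompt string ending in an open assistant header.

    Hypothetical helper: each message is a dict with "role" and "content" keys.
    """
    parts = [BEGIN_OF_TEXT]
    for message in messages:
        # Each turn is a role header, a blank line, the content, then <|eot_id|>.
        parts.append(f"{START_HEADER}{message['role']}{END_HEADER}\n\n")
        parts.append(f"{message['content'].strip()}{EOT}")
    # Leave the assistant turn open; the model continues from here.
    parts.append(f"{START_HEADER}assistant{END_HEADER}\n\n")
    return "".join(parts)


def extract_assistant_message(completion: str) -> str:
    """Keep only the text generated before the first <|eot_id|>."""
    return completion.split(EOT, 1)[0].strip()


prompt = build_llama3_prompt([
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "What is the capital of France?"},
])
print(prompt)
```

The prompt deliberately stops after the assistant header, so whatever the model generates up to `<|eot_id|>` is the {{assistant_message}}.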
Llama models can now output custom tool calls from a single message to allow easier tool calling. The following prompts provide an example of how custom tools can be called from the output of the model. It's important to note that the model itself does not execute the calls. When you receive a tool call response, use the output to format an answer to the original ...
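Because the model only emits the call and the application has to run it, a small dispatcher is usually needed on the application side. The sketch below assumes the system prompt told the model to write calls as `<function=NAME>{JSON arguments}</function>`, one format that appears in Meta's Llama 3.1 examples; if your prompt specifies a different format, the pattern must change accordingly. The `get_weather` tool, the `TOOLS` registry, and both function names are hypothetical.

```python
import json
import re
from typing import Any, Callable, Dict, Optional, Tuple

# Assumed call format: <function=NAME>{JSON arguments}</function>
TOOL_CALL_PATTERN = re.compile(r"<function=(\w+)>(.*?)</function>", re.DOTALL)


def parse_tool_call(model_output: str) -> Optional[Tuple[str, Dict[str, Any]]]:
    """Return (tool_name, arguments) if the output contains a tool call, else None."""
    match = TOOL_CALL_PATTERN.search(model_output)
    if match is None:
        return None
    name, raw_arguments = match.groups()
    return name, json.loads(raw_arguments)


def get_weather(city: str) -> str:
    """Hypothetical tool used only for illustration."""
    return f"Sunny in {city}"


# Registry of tools the application is willing to execute.
TOOLS: Dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def run_tool_call(model_output: str) -> Optional[Any]:
    """Execute the tool named in the model output; the model itself never runs it."""
    call = parse_tool_call(model_output)
    if call is None:
        return None  # Plain text answer, nothing to execute.
    name, arguments = call
    if name not in TOOLS:
        raise KeyError(f"Unknown tool: {name}")
    return TOOLS[name](**arguments)


result = run_tool_call('<function=get_weather>{"city": "Paris"}</function>')
print(result)
```

The returned value would then be sent back to the model as the tool call response, so that it can format the final answer.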
When you're trying a new model, it's a good idea to review the model card on Hugging Face to understand what (if any) system prompt template it uses. This can be used as a template to ... For many cases where an application is using a Hugging Face (HF) variant of the Llama 3 model, the upgrade path to Llama 3.1 should be straightforward. Llama 3.1 NemoGuard 8B TopicControl NIM performs input moderation, such as ensuring that the user prompt is consistent with rules specified as part of the system prompt. Interact with Meta Llama 2 Chat, Code Llama, and Llama Guard models.
This page covers capabilities and guidance specific to the models released with Llama 3.2: the Llama 3.2 quantized models (1B/3B), the Llama 3.2 lightweight models (1B/3B) and the Llama ... AI is the new electricity and will ...

rag_gt_prompt_template.jinja · AgentPublic/llama3instruct

Llama 3 Prompt Template Printable Word Searches

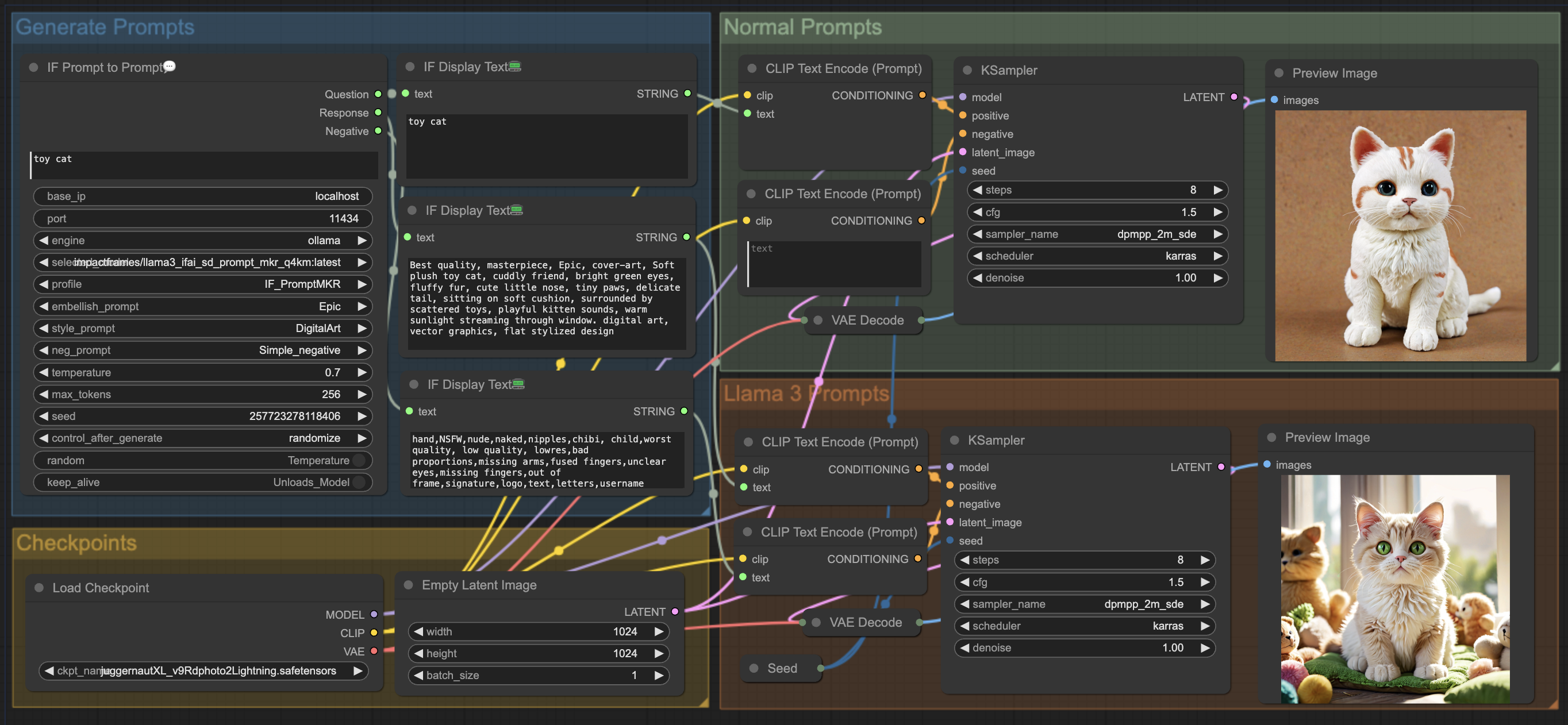

Using Llama 3 to Generate Prompts

Try These 20 Llama 3 Prompts & Boost Your Productivity At Work

Write Llama 3 prompts like a pro · Cognitive Class

meta-llama/Meta-Llama-3-8B-Instruct · What is the conversation template?

· Prompt Template example

(System, Given An Input Question, Convert It.

Changes To The Prompt Format.

When You're Trying A New Model, It's A Good Idea To Review The Model Card On Hugging Face To Understand What (If Any) System Prompt Template It Uses.

Interact With Meta Llama 2 Chat, Code Llama, And Llama Guard Models.
