Llama 3.1 8B Instruct Template Ooba

Llama 3.1 8B Instruct Template Ooba - I tried my best to piece together the correct prompt template (I originally included links to sources, but Reddit did not like the links for some reason). I wrote the following instruction template which... I have it up and running with a front end. Currently I managed to run it, but when answering it falls into an endless loop until... I still get answers like this: When you receive a tool call response, use the output to format an answer to the original... Putting <|eot_id|>, <|end_of_text|> in custom stopping strings doesn't change anything. How do I specify the chat template and format the API calls? How do I use custom LLM templates with the API?

Here are instructions for anybody else who needs to set the instruction template correctly in oobabooga: ... You don't touch the instruction template at all, because the model loader... Use with transformers you can run...

Llama is a large language model developed by... Llama 3.1 comes in three sizes: ... Llama 3 instruct special tokens used with Llama 3. A prompt should contain a single system message, can contain multiple alternating user and assistant messages, and always ends with... This page covers capabilities and guidance specific to the models released with Llama 3.2: the Llama 3.2 quantized models (1B/3B), the Llama 3.2 lightweight models (1B/3B) and the Llama...
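The prompt rule quoted above (one system message, alternating user and assistant turns) can be sketched in Python. This is only an illustration, not the poster's template: the helper name `build_llama3_prompt` is hypothetical, the header tokens `<|begin_of_text|>`, `<|start_header_id|>` and `<|end_header_id|>` are taken from Meta's published Llama 3 format rather than from this page, and because the quoted sentence is cut off, the sketch assumes the prompt ends with an open assistant header.

```python
def build_llama3_prompt(messages):
    """Render a list of {"role", "content"} dicts into one Llama 3 style prompt string.

    Assumptions (not stated on this page): the header tokens below follow Meta's
    published Llama 3 format, and the prompt ends with an open assistant header.
    """
    if not messages:
        raise ValueError("at least one message is required")

    # The quoted rule allows only a single system message.
    system_count = sum(1 for m in messages if m["role"] == "system")
    if system_count > 1:
        raise ValueError("a prompt should contain a single system message")

    parts = ["<|begin_of_text|>"]
    for message in messages:
        # Each turn is a role header, a blank line, the text, then <|eot_id|>.
        parts.append(
            f"<|start_header_id|>{message['role']}<|end_header_id|>\n\n"
            f"{message['content'].strip()}<|eot_id|>"
        )

    # Assumed ending: leave the assistant header open so the model writes the reply.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


example_prompt = build_llama3_prompt(
    [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Hello!"},
    ]
)
```

If generation does not stop, comparing the string a front end actually sends against a reference like `example_prompt` is one way to check whether the template, rather than the stopping strings, is the problem.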
Meta Llama 3.1 8B Instruct By metallama Benchmarks, Features and

metallama/MetaLlama38BInstruct · Where can I get a config.json

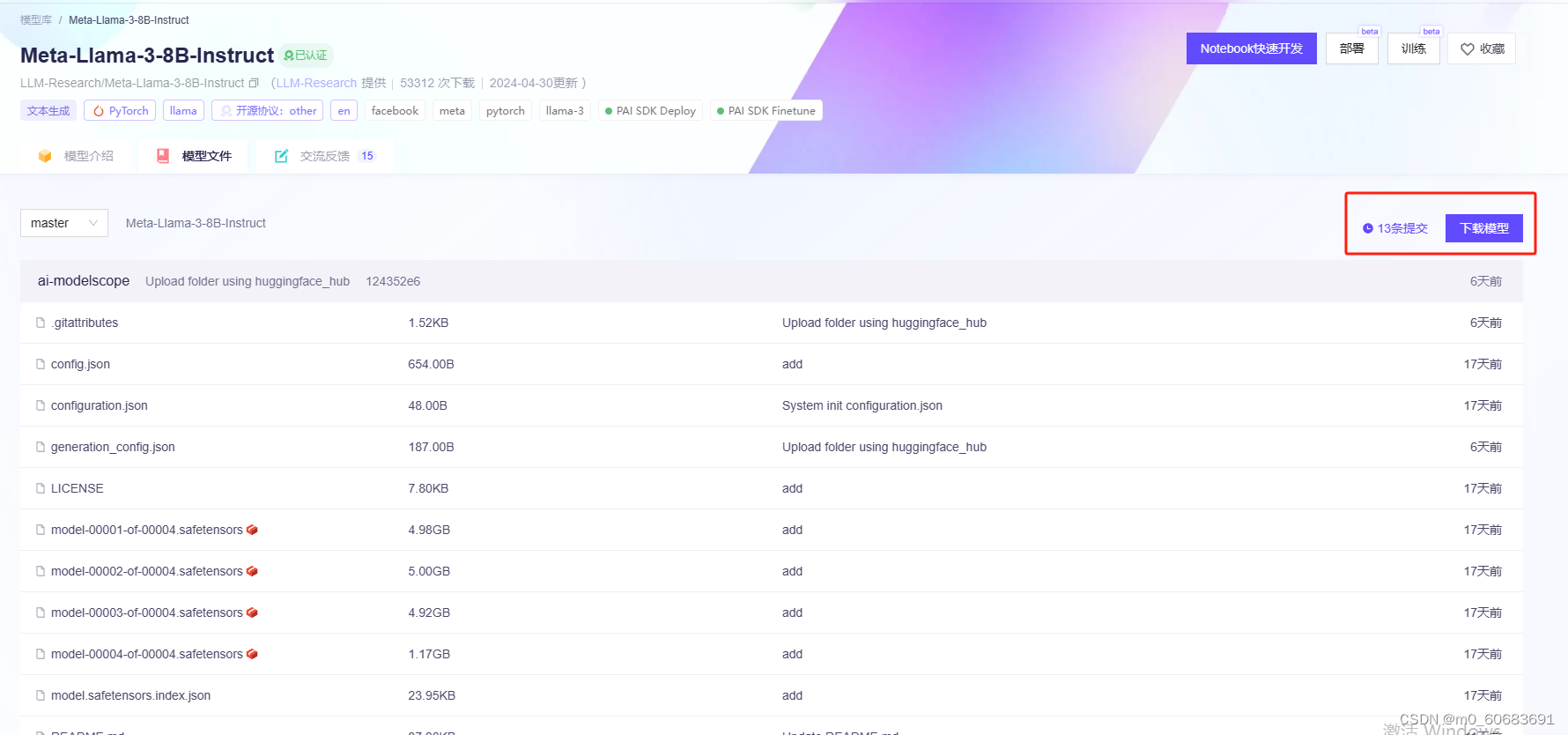

anguia001/MetaLlama38BInstruct at main

Llama 3 8B Instruct Model library

Tutorial: Fine-tuning the llama38b large model with LLaMA_Factory_llama3 model fine-tuning and saving_llama38binstruct download CSDN Blog

META LLAMA 3 8B INSTRUCT LLM How to Create Medical Chatbot with

Junrulu/Llama38BInstructIterativeSamPO · Hugging Face

Llama 3 Swallow 8B Instruct V0.1 a Hugging Face Space by alfredplpl

unsloth/llama38bInstructbnb4bit · Hugging Face

Manage Access models/llama38binstruct

How Do I Use Custom LLM Templates With The API?
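One possible shape for such an API call is sketched below, under several assumptions that are not confirmed by this page: that the front end is text-generation-webui with its OpenAI-compatible API enabled at `http://127.0.0.1:5000`, that the chat endpoint accepts `mode` and `instruction_template` fields, and that a saved template exists under the name you pass in (the name used in the usage comment is hypothetical). The helper names `build_chat_payload` and `send_chat` are made up for illustration.

```python
import json
import urllib.request

# Assumption: local text-generation-webui with the OpenAI-compatible API enabled.
API_URL = "http://127.0.0.1:5000/v1/chat/completions"


def build_chat_payload(user_message, system_message=None, template_name=None, max_tokens=256):
    """Build the JSON body for one chat request (no network access here)."""
    messages = []
    if system_message:
        messages.append({"role": "system", "content": system_message})
    messages.append({"role": "user", "content": user_message})

    payload = {
        "messages": messages,
        "mode": "instruct",  # assumed field: ask the server to apply an instruction template
        "max_tokens": max_tokens,
        # The page reports that custom stopping strings alone did not fix looping;
        # they are included only to show where they would go.
        "stop": ["<|eot_id|>", "<|end_of_text|>"],
    }
    if template_name is not None:
        # Assumed field: name of an instruction template saved on the server.
        payload["instruction_template"] = template_name
    return payload


def send_chat(payload, url=API_URL):
    """POST the payload and return the assistant text (needs a running server)."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# Usage (hypothetical template name, requires a running server):
# reply = send_chat(build_chat_payload("Hello!", template_name="Llama-v3"))
```

Leaving `template_name` unset matches the advice quoted on this page that the instruction template may not need touching, since the server would then fall back to whatever template it already associates with the loaded model.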

Putting <|eot_id|>, <|end_of_text|> In Custom Stopping Strings Doesn't Change Anything.

Here's Instructions For Anybody Else Who Needs To Set The Instruction Template Correctly In Oobabooga:

I Tried My Best To Piece Together The Correct Prompt Template (I Originally Included Links To Sources But Reddit Did Not Like The Links For Some Reason).
